As AI continues to evolve in 2025, PHP developers are turning to large language models (LLMs) to streamline workflows, automate repetitive tasks, and even brainstorm architectural solutions. But which models actually deliver the best results for PHP?

We’ve analyzed extensive developer feedback and paired it with the top AI models available today to help you choose the right LLM for PHP development.

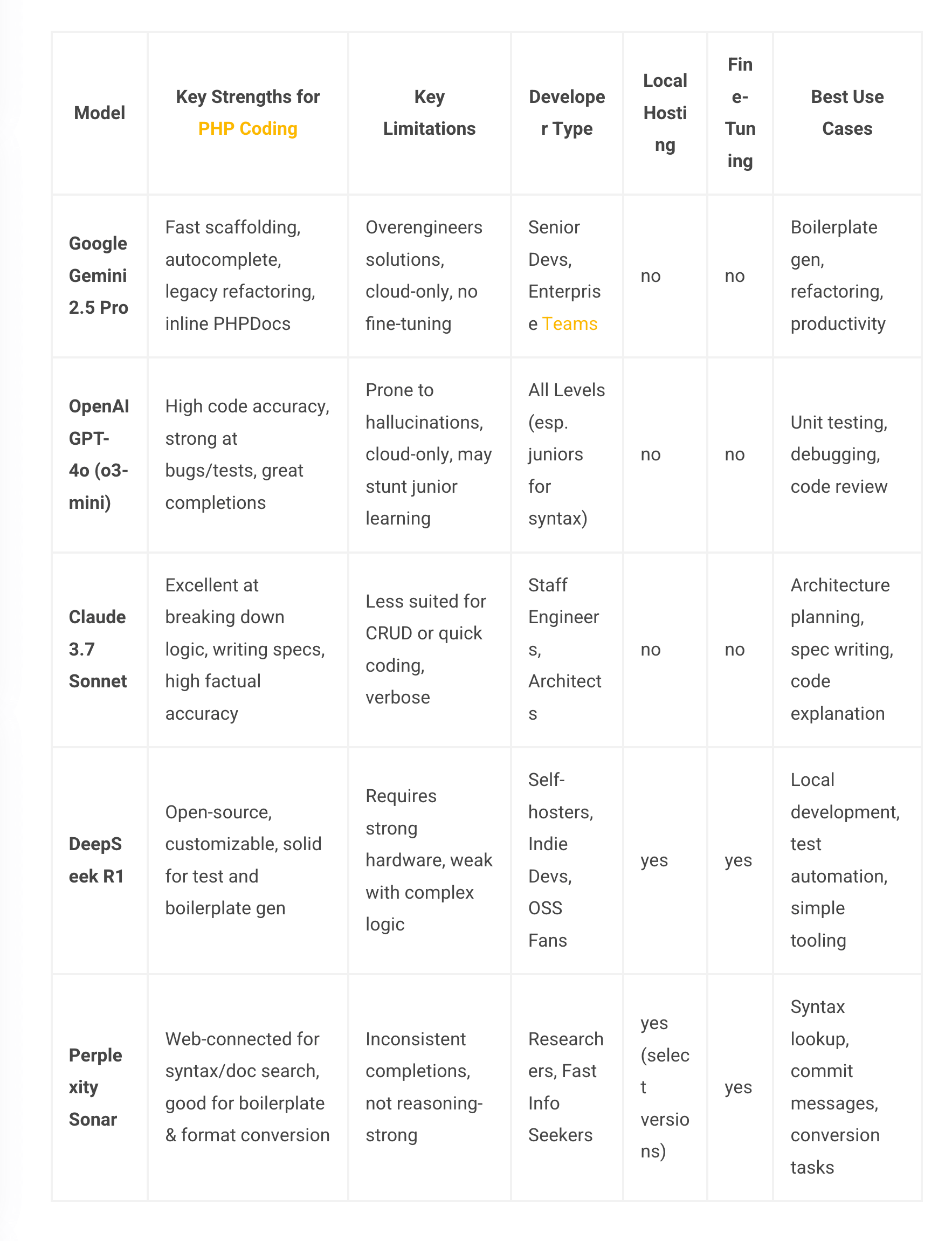

Best LLMs for PHP Coding in 2025: Summary Table

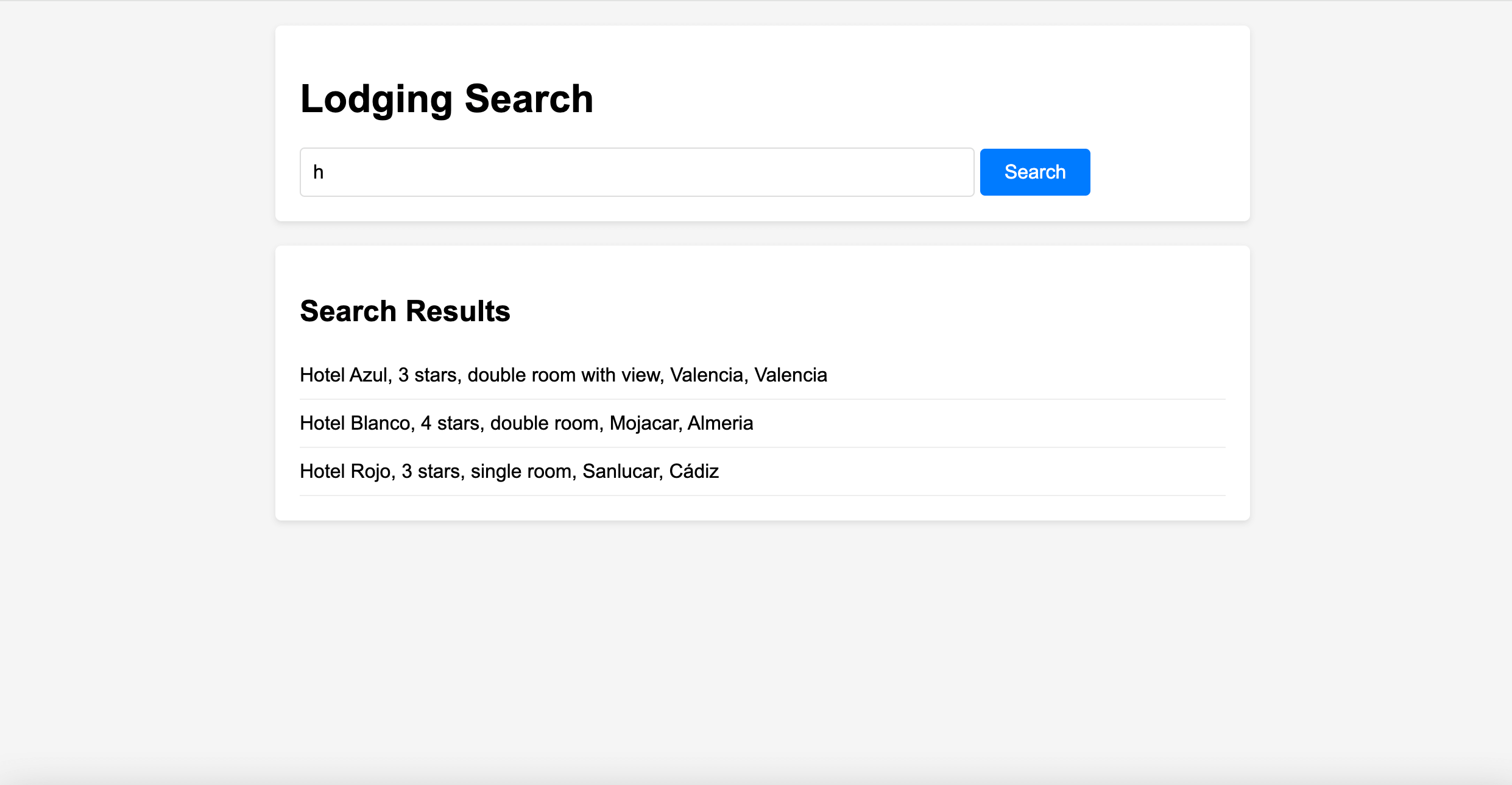

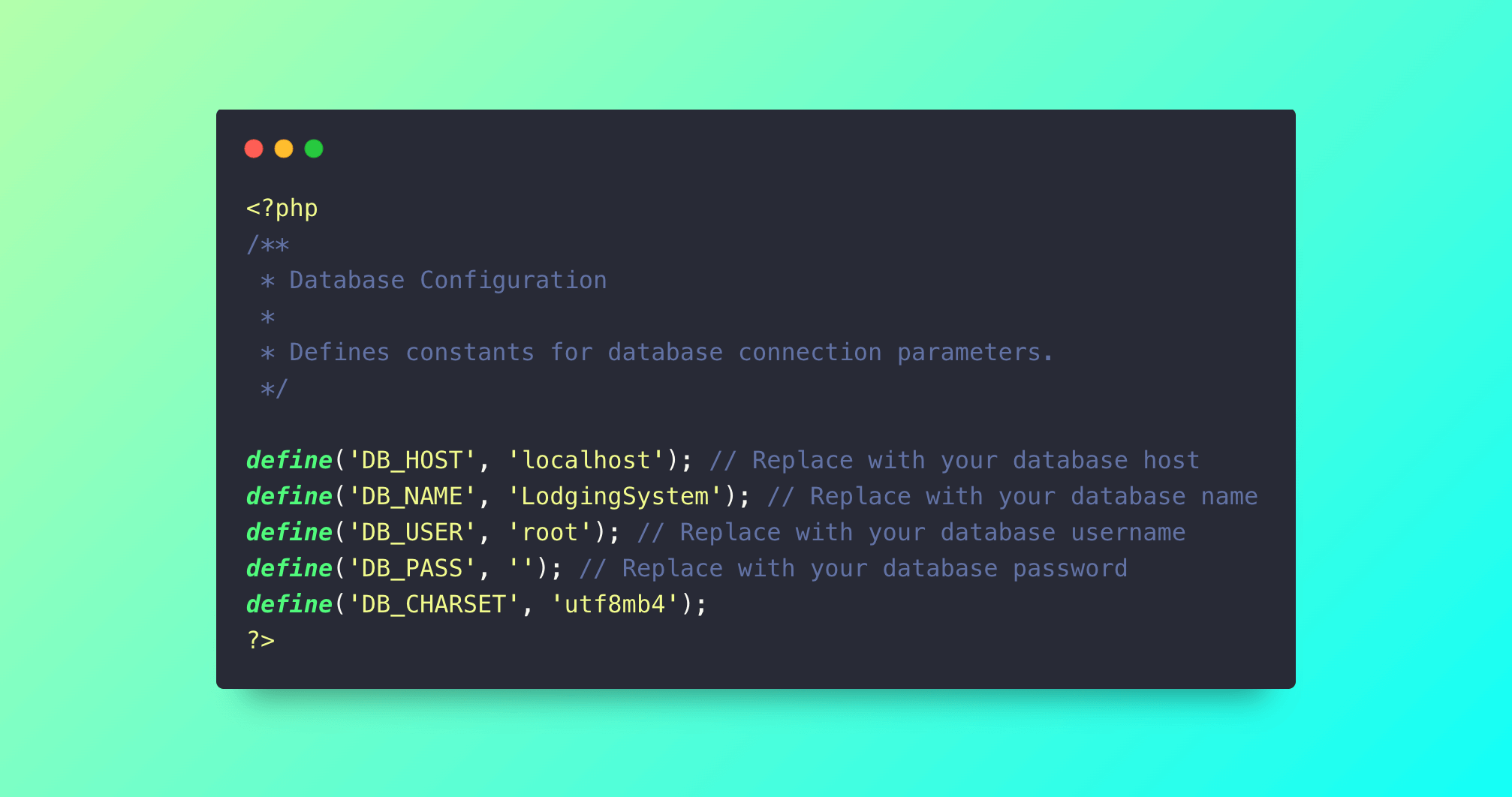

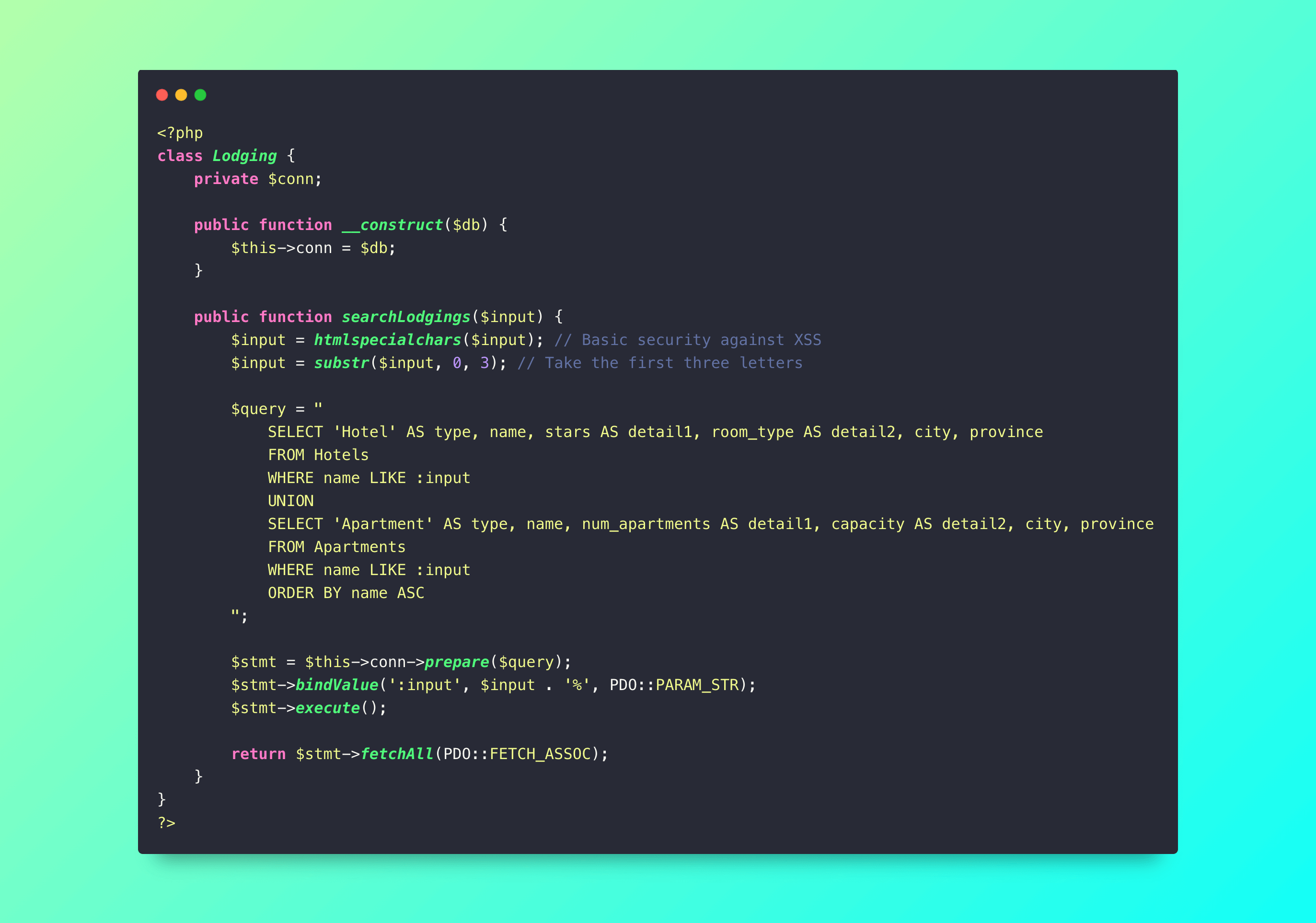

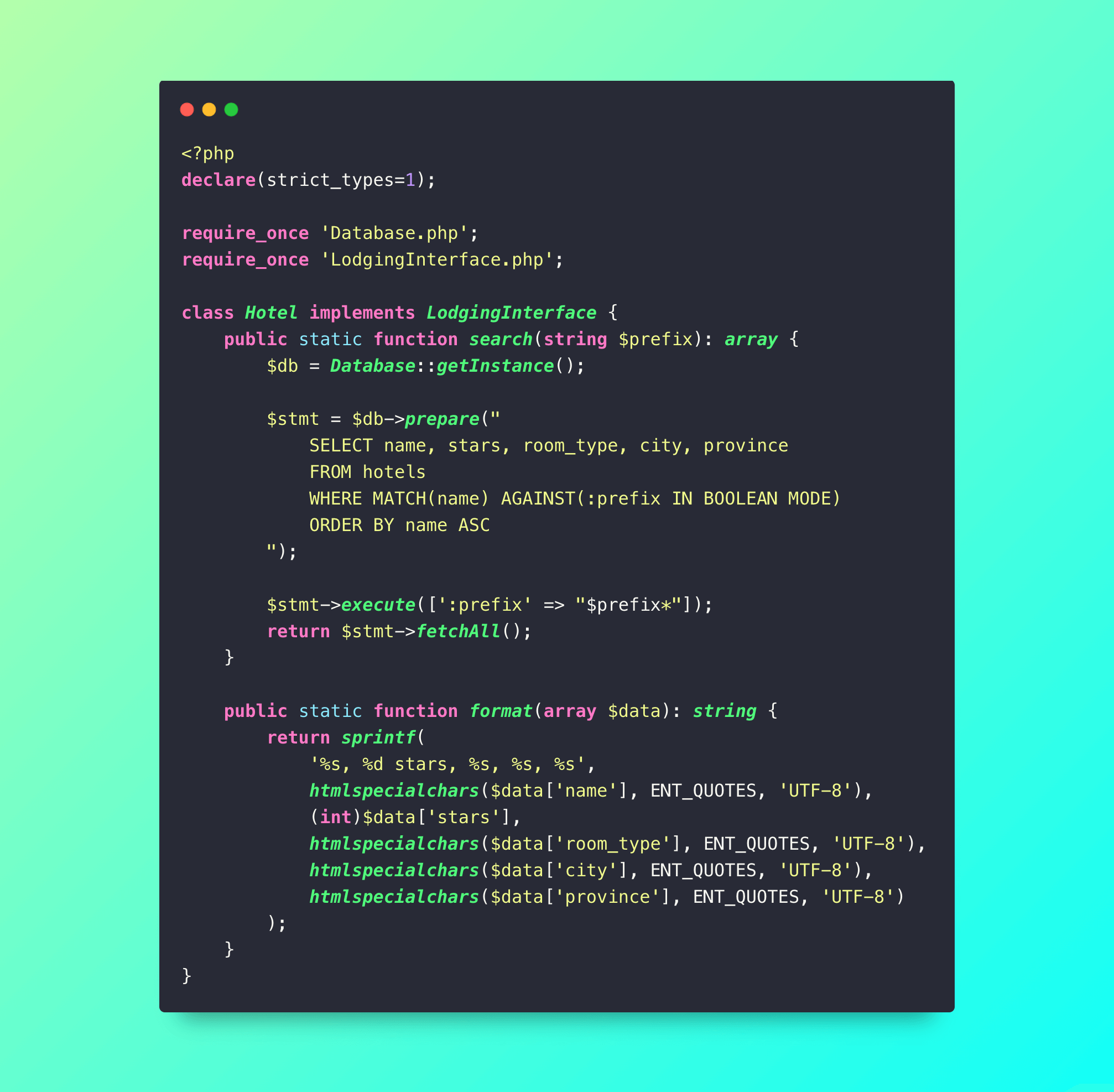

Create a lodging system to be used as an example

1. Google Gemini 2.5 Pro

Developer: Google DeepMind

Release Date: March 2025

Parameters: Not publicly disclosed

Context Window: 1 million tokens

Knowledge Cutoff: January 2025

What is it?

Gemini 2.5 Pro is Google’s most advanced multimodal LLM with support for code, image, and video. It integrates deeply into Google Workspace and is used across productivity and development tools.

For PHP Coding

In PHP development, Gemini is praised for automating repetitive tasks such as scaffolding boilerplate code, generating PHPDoc blocks, and writing wrapper functions. It's especially handy for refactoring legacy code, a recurring pain point for PHP devs. Its autocomplete can save time, but devs caution that it tends toward over-engineered solutions; a common complaint is that Gemini sometimes “writes more code than needed.”

Reasons to Use:

Strong in generating basic PHP structures and CRUD logic

Ideal for experienced devs who need speed and automation

Works well for prototyping, generating commit messages, and summarizing tasks

Reasons to Avoid:

– May produce complex or redundant solutions for simple tasks

– Not ideal for architecture decisions or nuanced business logic

– Needs frequent manual review for accuracy and security

2. OpenAI GPT-4o and o3-mini

Developer: OpenAI

Release Date: May 2024 (GPT-4o); January 31, 2025 (o3-mini)

Parameters: Not publicly disclosed

Context Window: 128,000 tokens (GPT-4o); 200,000 tokens (o3-mini)

Knowledge Cutoff: October 2023

What is it?

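GPT-4o is OpenAI's general-purpose multimodal model, while o3-mini, released January 31, 2025, is a compact model in OpenAI's “o” series of reasoning models. o3-mini is fast, responsive, and cost-efficient, with selectable reasoning-effort levels (offered in ChatGPT as “o3-mini” and “o3-mini-high”). It doesn't accept image input, but it performs well on logical tasks and coding prompts.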

For PHP Coding

o3-mini in particular was noted as a top performer on competitive-programming benchmarks, and it's one of the few models that can outperform the average human competitor there. Devs use OpenAI's models to auto-generate unit tests for PHP code, scaffold apps, and find subtle bugs such as off-by-one errors. They're also a popular “rubber duck debugger” that helps clarify logic.
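To make the “off-by-one” bug concrete, here is a hypothetical pagination helper, sketched in Python for brevity (the function names and the 1-based page convention are assumptions for illustration). The same slip is common in PHP when computing a SQL `OFFSET` from a page number, and it is the kind of mistake a model can spot when you paste both versions and ask why page 1 skips records.

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")


def paginate_buggy(items: Sequence[T], page: int, per_page: int) -> List[T]:
    # Hypothetical first draft: treats the 1-based page number as 0-based,
    # so page 1 silently skips the first `per_page` items.
    start = page * per_page
    return list(items[start:start + per_page])


def paginate(items: Sequence[T], page: int, per_page: int) -> List[T]:
    # Fixed version: pages start at 1, so the offset is (page - 1) * per_page.
    if page < 1 or per_page < 1:
        raise ValueError("page and per_page must be >= 1")
    start = (page - 1) * per_page
    return list(items[start:start + per_page])
```

With ten items and three per page, `paginate(list(range(1, 11)), 1, 3)` returns `[1, 2, 3]`, while the buggy draft returns `[4, 5, 6]` for the same call.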

Reasons to Use:

Top-tier for refactoring, debugging, and testing

Strong at handling subtle syntax and logic issues

Ideal for juniors needing syntax help or seniors validating edge cases

Reasons to Avoid:

– Prone to hallucinations, which become costly when its output is trusted without review

– Can weaken long-term dev skill through over-reliance

– Cloud only: it can't be self-hosted, and fine-tuning (where offered) runs only through OpenAI's platform

3. Claude 3.7 Sonnet

Developer: Anthropic

Release Date: February 2025

Parameters: Undisclosed (mid-tier in Claude 3 lineup)

Context Window: 200,000 tokens

Knowledge Cutoff: October 2024

What is it?

Claude Sonnet is Anthropic’s reasoning-optimized model, often used for structured analysis, policy writing, and code explanation. It’s known for factual accuracy, long-context reliability, and detailed reasoning.

For PHP Coding

Claude 3.7 Sonnet is favored by devs who use LLMs for writing specifications, reasoning through architecture, or understanding complex codebases. Devs rely on it for breaking down complex logic and reviewing large code spans, making it ideal for code review, narrative explanations, and test planning.

Reasons to Use:

Strong at breaking down complex PHP logic

Great for documenting and explaining code

Helpful in planning architecture or suggesting test cases

Reasons to Avoid:

– Tends to repeat or over-describe code

– Some developers find it less effective for hands-on, repetitive coding tasks (e.g., CRUD generation)

– No open-source or local deployment options

4. DeepSeek R1

Developer: DeepSeek

Release Date: January 2025

Parameters: 671B total (Mixture-of-Experts, about 37B active per token)

Context Window: 128,000 tokens (DeepSeek's hosted API initially served 64,000)

Knowledge Cutoff: Late 2023

What is it?

DeepSeek R1 is a large, open-source model with strong performance in code tasks. It’s a rising favorite for devs who want fine-tuning capabilities, local hosting, and full transparency in model weights.

For PHP Coding

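Developers often run DeepSeek R1 locally through Ollama (usually one of its smaller distilled variants), alongside other local models such as Microsoft's Phi-4, for tasks like test automation, scaffolding, and writing wrapper methods. It's effective for simple, high-frequency tasks like writing data access layers or converting legacy code, though it's not ideal for full-stack architecture or real-time suggestions, since its step-by-step reasoning makes responses comparatively slow.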
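For readers who want to try the local setup, here is a minimal sketch of calling a locally pulled DeepSeek R1 model through Ollama's REST API, written in Python with only the standard library. It assumes Ollama is running on its default port (11434) and that you have pulled the `deepseek-r1:7b` tag; both the tag and the helper names are illustrative choices, not requirements. Depending on your Ollama version, the reply may include the model's `<think>…</think>` reasoning, so the sketch strips it.

```python
import json
import re
import urllib.request

# Ollama's default local endpoint; adjust if your server runs elsewhere.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt: str, model: str = "deepseek-r1:7b") -> urllib.request.Request:
    """Build a non-streaming /api/generate request (model tag is an assumption)."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def strip_reasoning(text: str) -> str:
    """Drop a <think>...</think> block if the reply includes one."""
    return re.sub(r"<think>.*?</think>", "", text, flags=re.DOTALL).strip()


def ask_local_model(prompt: str, model: str = "deepseek-r1:7b") -> str:
    """Send the prompt to a running Ollama server and return the answer text."""
    with urllib.request.urlopen(build_request(prompt, model), timeout=300) as resp:
        body = json.load(resp)
    return strip_reasoning(body["response"])
```

After `ollama pull deepseek-r1:7b`, a call such as `ask_local_model("Write a PHPUnit test for add(int $a, int $b): int")` returns the model's answer as plain text. Smaller distilled tags trade accuracy for lower hardware requirements.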

Reasons to Use:

Fully open-source and locally hostable

Works well with self-hosted dev tools (e.g., Ollama)

Useful for repetitive coding and basic PHP test generation

Reasons to Avoid:

– Requires powerful hardware for large models

– Not specifically optimized for coding (despite good performance)

– Accuracy dips with complex design problems

5. Perplexity Sonar

Developer: Perplexity AI

Release Date: Early 2024

Parameters: Not publicly disclosed

Context Window: Estimated 128K+

Knowledge Cutoff: Near real-time (web-connected model)

What is it?

Sonar is the core engine powering Perplexity’s AI search experience. It combines LLMs with real-time web access to provide source-verified answers, making it more of a search engine replacement than a dev co-pilot.

For PHP Coding

Sonar is useful for syntax lookups, code documentation, and quick conversion tasks (like data format translations). It’s not ideal for deep logic or architecture, but it’s excellent for spot-fixing, research, and code summarization.

Reasons to Use:

Real-time answers from web + code generation

Great for PHPDocs, commit messages, and boilerplate

Available through Perplexity's API for integration into your own tools

Reasons to Avoid:

– Not coding-specialized; better at research than reasoning

– Cloud only; queries (including any code you paste) are sent to Perplexity's servers

– Quality of completions varies depending on prompt clarity

What Developers Love About AI Coding Assistants

1. Huge Time Saver for Repetitive Tasks

LLMs excel at:

Autocompleting boilerplate code

Writing PHPDoc comments

Generating unit tests

Creating commit messages

2. Great for Brainstorming and Prototyping

Many developers use AI to:

Scaffold new projects

Draft quick implementations

Explore different design patterns

Discuss architecture tradeoffs

3. Syntax Help and Refactoring

LLMs are helpful when you:

Forget exact function names

Need assistance with unfamiliar libraries

Want to clean up or restructure code

Where AI Still Falls Short

1. Complex Tasks Confuse It

LLMs struggle with:

Multi-file architecture

Legacy PHP systems

Tasks that lack clear training examples (like FrankenPHP or obscure setups)

2. They Often Hallucinate

Even top-tier models sometimes:

Invent functions

Misuse syntax

Recommend suboptimal solutions
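One cheap guard against invented functions is to confirm that every name in a suggestion actually exists before you run it. In PHP that check is `function_exists()` or `method_exists()`; the sketch below shows the same idea in Python using `importlib`. The helper names and the sample list of “suggested” calls are hypothetical.

```python
import importlib


def name_exists(dotted: str) -> bool:
    """Return True if 'module.attr' (e.g. 'os.path.join') resolves to a real object."""
    module_name, _, attr = dotted.rpartition(".")
    if not module_name:
        return False
    try:
        module = importlib.import_module(module_name)
    except ImportError:  # also covers ModuleNotFoundError
        return False
    return hasattr(module, attr)


def check_suggested_calls(names):
    """Map each function name an assistant suggested to whether it exists."""
    return {name: name_exists(name) for name in names}
```

For example, checking `["json.dumps", "json.dump_pretty"]` flags `json.dump_pretty` as missing, which is exactly the kind of plausible-sounding name a model can make up. Existence is only a first filter: a real function can still be misused.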

3. Can Degrade Developer Skill Over Time

Over-reliance may:

Weaken debugging and research skills

Lower overall code quality

Limit knowledge-sharing within teams

Feedback from the PHP Dev Community

Here’s how developers describe using LLMs:

“AI is amazing for boring, repetitive stuff, but it’s like a junior dev that keeps needing supervision.”

Senior PHP Developer

“Great for syntax and automation. But don’t trust it to handle architectural decisions.”

CTO at a mid-sized SaaS company

“I use it to kickstart projects. It’s like digital ‘lorem ipsum’, gives me something to shape.”

Solo indie dev

Comparing the LLMs for PHP Coding

Let’s break down which LLM fits which use case:

Visual: Use-Case Mapping Table

| Task | Best LLM | Notes |
|---|---|---|
| Autocompletion | Gemini 2.5 Pro, GPT-4o | Especially effective in IDEs |
| Code Refactoring | Claude 3.7 Sonnet, DeepSeek R1 | Great with tests & legacy code |
| Architecture Planning | GPT-4o, Claude 3.7 Sonnet | Excellent reasoning abilities |
| Quick Syntax Help | Perplexity Sonar | Best for narrowing docs/search |
| Documentation Generation | GPT-4o, Claude 3.7 Sonnet | Clear, structured output |
| Repetitive Task Automation | Gemini 2.5 Pro, Mistral | Good for boilerplate |
| Legacy Code Conversion | Qwen 2.5 Max | Effective with outdated patterns |

Which LLM Is Right for You? Tailored Picks Based on Your Role and Needs

Choosing the best AI coding assistant isn’t one-size-fits-all. Your experience level, coding environment, and project complexity all influence which LLM will serve you best. Here’s a breakdown to help you decide, with clear benefits for each type of developer:

If you’re a senior developer looking to automate and architect

Recommended: Claude 3.7 Sonnet or GPT-4o

Why it matters:

As a senior dev, you’re expected to deliver clean, scalable solutions fast. Claude 3.7 Sonnet excels at reasoning-heavy tasks, generating architecture blueprints, writing specs, and validating code design. GPT-4o, on the other hand, brings robust autocomplete, deep context awareness, and even helps draft technical documentation. These tools don’t just automate, they collaborate.

Benefit for you: Spend less time on boilerplate and more on solving big-picture problems, while ensuring that the logic behind your designs holds up under scrutiny.

If you’re a solo dev or indie builder

Recommended: Gemini 2.5 Pro or Qwen 2.5 Max

Why it matters:

Flying solo means juggling everything, from front-end tweaks to back-end logic to deployment. Gemini 2.5 Pro is fantastic at auto-generating UI elements, CRUD scaffolding, and filling in code gaps in real time. Qwen 2.5 Max stands out for handling legacy PHP refactors and transforming outdated code into modern, structured components.

Benefit for you: These LLMs free up mental space, reduce grunt work, and help you build production-ready prototypes faster, perfect when time and resources are tight.

If you’re working within frameworks like Laravel

Recommended: DeepSeek R1

Why it matters:

Developers report that DeepSeek R1 shows strong contextual awareness within popular PHP frameworks, especially Laravel, handling common routing, migration, and Eloquent ORM patterns better than many general-purpose models.

Benefit for you: You'll get cleaner, more accurate code completions and suggestions tailored to your framework, saving hours of documentation reading or Stack Overflow digging.

If you’re in research or documentation-heavy environments

Recommended: Perplexity Sonar

Why it matters:

When you’re navigating multiple sources, writing internal wikis, or comparing PHP library docs, Perplexity Sonar shines. It’s designed to retrieve specific, relevant information and condense it into useful answers or summaries.

Benefit for you: You’ll spend less time switching between browser tabs and more time focused on your actual work, whether it’s documenting a codebase or figuring out why a PHP function behaves a certain way.

If you’re looking for lightweight, fast performance

Recommended: Mistral

Why it matters:

Sometimes you just need quick completions or automation for small, well-defined tasks, without the overhead of a massive model. Mistral is optimized for speed and efficiency, making it ideal for local environments, edge devices, or quick iterations.

Benefit for you: You get AI-enhanced coding without sacrificing performance, especially on constrained systems or less powerful machines.

Each of these LLMs has its own strengths, and by matching the right one to your workflow, you unlock both speed and confidence in your PHP development process. Whether you’re building, refactoring, researching, or just getting unstuck, there’s a model that meets you where you are.

Tips for Using LLMs Effectively in PHP (And Why They Matter)

Using AI to assist your PHP coding can be a game-changer, but only if you use it wisely. Here are four expert-backed strategies to get the most out of LLMs while still growing your core developer skills:

1. Be specific with your prompts

Example: Instead of asking “Can you help with my Laravel app?” ask:

“What’s wrong with this Laravel route returning a 404 on a named route?”

Why it matters:

LLMs perform best when they’re given clear, focused problems. Vague or overly broad prompts lead to generic or incorrect code that doesn’t help much. By asking targeted questions, you’re more likely to get a usable, relevant response on the first try, saving time and frustration.

Benefit for you:

Less guesswork, better answers, and faster results. You also learn how to frame technical problems more clearly, a skill that improves your communication with both AI and human teammates.
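A focused prompt usually states four things: the context, the exact code, what happens, and what you expected instead. The hypothetical helper below, sketched in Python, simply assembles those parts in a fixed order so none gets forgotten; the field names and closing instruction are illustrative choices, not a required format.

```python
def build_debug_prompt(framework: str, code: str, observed: str, expected: str) -> str:
    """Assemble a focused debugging prompt: context, code, observed vs. expected."""
    return (
        f"I'm working in {framework}.\n"
        f"Code:\n{code.strip()}\n"
        f"Observed: {observed}\n"
        f"Expected: {expected}\n"
        "Explain the most likely cause and suggest a minimal fix."
    )
```

For the Laravel example above, you would pass the route definition as `code`, “visiting the URL for the named route returns a 404” as `observed`, and the response you expected as `expected`.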

2. Validate everything

Rule: Never trust AI-generated code blindly. Always test, review, and understand the output.

Why it matters:

LLMs are trained to predict what looks right, not necessarily what is right. They can hallucinate functions, skip crucial logic, or use outdated practices, especially in PHP where legacy patterns still linger online.

Benefit for you:

You avoid introducing hidden bugs, security vulnerabilities, or technical debt. Reviewing AI-generated code also sharpens your debugging and testing skills, critical for writing production-ready apps.
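A quick illustration of why review matters for security: the sketch below, in Python with the built-in `sqlite3` module, contrasts a query that pastes user input into the SQL string (a pattern assistants sometimes produce) with a parameterized one. The `guests` table and its data are hypothetical. In PHP the equivalent fix is a PDO prepared statement with placeholders.

```python
import sqlite3


def setup() -> sqlite3.Connection:
    # In-memory demo table of hypothetical lodging guests.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE guests (name TEXT)")
    conn.executemany("INSERT INTO guests VALUES (?)", [("Ana",), ("Ben",)])
    return conn


def find_guest_unsafe(conn: sqlite3.Connection, name: str):
    # Risky pattern: user input becomes part of the SQL text.
    return conn.execute(f"SELECT name FROM guests WHERE name = '{name}'").fetchall()


def find_guest_safe(conn: sqlite3.Connection, name: str):
    # Reviewed version: a bound parameter keeps input as data, never as SQL.
    return conn.execute("SELECT name FROM guests WHERE name = ?", (name,)).fetchall()
```

Passing `x' OR '1'='1` to the unsafe version returns every guest, while the parameterized version returns nothing. A small test like this is worth writing whenever generated code touches a database.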

3. Don’t skip learning

Tip for juniors: Use AI as a supplement, not a crutch. If you rely on it for every task, you’ll miss key fundamentals.

Why it matters:

AI might give you an answer, but it won't necessarily explain why that answer is correct, and working out the why is where real learning happens. Especially for junior devs, skipping this step can lead to a shallow understanding of PHP concepts, which becomes a problem in interviews, peer reviews, or when something breaks in production.

Benefit for you:

You build real confidence in your skills and learn how to solve problems independently, turning you into a valuable, growth-ready developer rather than someone who just follows suggestions.

4. Refactor AI code

Mindset: Treat LLMs like an assistant, not a senior engineer.

Why it matters:

AI-generated code often works, but that doesn't mean it's clean or maintainable. It may repeat logic unnecessarily, violate naming conventions, or ignore the structure of your existing codebase, because it has no built-in knowledge of your team's standards or your app's long-term goals.

Benefit for you:

Refactoring turns functional but messy output into polished, professional-grade code. Over time, this also teaches you to recognize good architecture, simplify logic, and improve performance, all essential for becoming a senior developer.
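Here is a small before-and-after, sketched in Python for brevity with hypothetical nightly rates for a lodging system. The first function is the kind of branch-per-case draft an assistant often returns; the refactor moves the rates into one table so adding a room type becomes a data change. Note that the refactored version also adds a `nights` check, a deliberate behavior change worth calling out in code review.

```python
# Hypothetical nightly rates for an example lodging system.
RATES = {"single": 80, "double": 120, "suite": 250}


def stay_price_ai(room_type, nights):
    # Typical first draft: one branch per room type, same formula repeated.
    if room_type == "single":
        return 80 * nights
    elif room_type == "double":
        return 120 * nights
    elif room_type == "suite":
        return 250 * nights
    else:
        raise ValueError("unknown room type")


def stay_price(room_type: str, nights: int) -> int:
    # Refactored: rates live in one table; input is validated up front.
    if nights < 1:
        raise ValueError("nights must be >= 1")
    try:
        return RATES[room_type] * nights
    except KeyError:
        raise ValueError(f"unknown room type: {room_type!r}") from None
```

Before swapping one for the other, a quick equivalence check over valid inputs (every room type, a few stay lengths) confirms the refactor didn't change results.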

AI tools like Gemini, GPT-4o, Claude, and others are changing how we code in PHP, but they're not a replacement for real developer insight. Treat them like assistants, not architects. They'll help you write faster, learn better, and debug smarter, if you stay in control.

Ashley is an esteemed technical author specializing in scientific computer science. With a distinguished background as a developer and team manager at Deloitte and Cognizant Group, they have showcased exceptional leadership skills and technical expertise in delivering successful projects.

As a technical author, Ashley remains committed to staying at the forefront of emerging technologies and driving innovation in scientific computer science. Their expertise in PHP web development, coupled with their experience as a developer and team manager, positions them as a valuable resource for professionals seeking guidance and best practices. With each publication, Ashley strives to empower readers, inspire creativity, and propel the field of scientific computer science forward.
